This user guide explains the Algorithm Impact Assessment (AIA) process and provides guidance on how to complete the AIA Questionnaire.

Purpose

Summary of key points

Acknowledgements

About the Algorithm Impact Assessment process

Overview of the AIA process

The Algorithm charter commitments

Every agency is unique

A note on terminology

Using this guide

Why is the AIA process necessary?

What is an algorithm?

When does the AIA process apply?

Who should be involved in the AIA process?

How should we conduct the AIA process?

Where can we find support?

Interim Centre for Data Ethics and Innovation

Data Ethics Advisory Board

Government Chief Privacy Officer

Government Chief Information Security Officer

Office of the Privacy Commissioner

AI Forum New Zealand

Completing the AIA Questionnaire

1. AIA details

2. Project information

3. Overall risk profile

4. Governance and human oversight

5. Partnership with Māori

6. Data

7. Privacy

8. Bias and other unfair outcomes

9. Algorithm development, procurement and monitoring

10. Safety, security and reliability

11. Community engagement

12. Transparency and explainability

Glossary

Appendix 1: DPUP principles

Appendix 2: References

Case studies

This Algorithm Impact Assessment User Guide forms part of the Algorithm Impact Assessment (AIA) process and documentation prepared by Stats NZ to help government agencies meet their commitments under the Algorithm Charter for Aotearoa New Zealand (the Charter).

Algorithm Charter for Aotearoa New Zealand

This User Guide explains the AIA process and provides guidance on how to complete the AIA Questionnaire. It is designed to support those working on algorithm projects with explanations, key considerations, case studies and links to additional materials that may be helpful.

Algorithm Impact Assessment Questionnaire – [DOCX 87KB]

The AIA process is designed to facilitate informed decision-making about the benefits and risks of government use of algorithms.

Like the Charter, the ultimate aim of the AIA process is to support safe and value-creating innovation by agencies. Adopting a responsible approach to the development and use of algorithms and AI systems is a key contributor to innovation rather than something that stifles or blocks it. That’s why the AIA process takes a risk-based approach intended to strike the right balance between ensuring agencies can use algorithms to provide better services, while still maintaining the trust and confidence of New Zealanders.

Conducting an Algorithm Impact Assessment will enable agencies to identify, assess and document any potential risks and harms of algorithms so they are in a better position to address them.

Following an introduction outlining the AIA process, this AIA Guide describes a best practice approach to the issues raised in the AIA questionnaire and helps you answer the questions in the AIA Questionnaire. That approach includes explanations, guidance, case studies, risk mitigation techniques and further reading suggestions for each of the following areas.

Stats NZ would like to thank Frith Tweedie from Simply Privacy for creating the Algorithm Impact Assessment process, designing the questionnaires, and compiling this user guide. Your skills and expertise have been invaluable.

We would also like to thank Katrine Evans (Government Chief Privacy Officer), Simon Anastasiadis (Principal Analyst, Stats NZ), Professor Alistair Knott (School of Engineering and Computer Science, Victoria University of Wellington), Joy Liddicoat (President of InternetNZ), the Office of the Privacy Commissioner, and Daniel Lensen (General Manager Client & Business Intelligence at Ministry of Social Development). Your insightful feedback has helped shaped these assessment tools.

This section looks at what the AIA process entails, why it’s important, when it applies, who should be involved, suggestions on how best to conduct the process and some key governing principles.

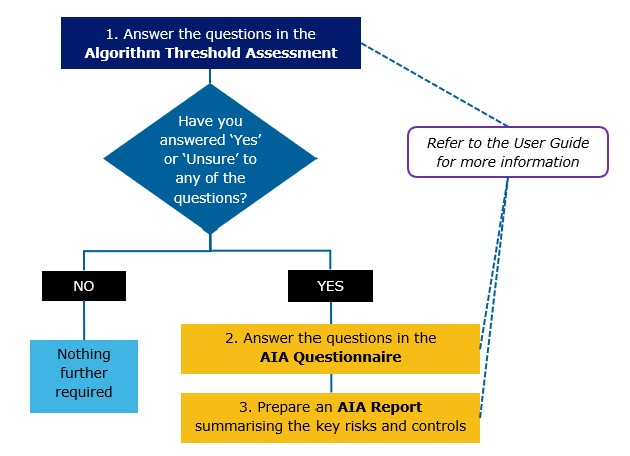

There are four components to the AIA process.

Algorithm Threshold Assessment - [DOCX 58KB]

AIA Questionnaire - [DOCX 87KB]

AIA Report Template - [DOCX 381KB]

The Charter comprises six key commitments by government agencies to demonstrate their understanding that decisions made using algorithms impact people in New Zealand. Those commitments are:

Algorithm charter for Aotearoa New Zealand

The AIA documentation adopts a best practice approach to satisfying the Charter commitments, recognising that each agency will need to tailor the process and the ultimate risk assessments in a way that is appropriate for its own context, risk profile and role in society.

As such, each agency is free to adopt these documents - or aspects of them - as best suits their needs.

Agencies that already have assessments in place covering many of the issues raised in the AIA process may only wish to borrow certain aspects from this documentation to supplement their own processes.

Others without anything similar in place may need to adopt the full process.

Please see the Glossary at the end of this User Guide for the key terms used throughout the AIA documentation – including the Algorithm Threshold Assessment - and their meaning within this context.

Although your algorithm project may involve more than one algorithm, the AIA documents refer to ‘an algorithm’ in the singular throughout for simplicity and consistency.

Similarly, while the AIA process should be conducted as early as possible, it can still be used for algorithms already in use. You will need to adjust the future tense references accordingly.

The User Guide should be read and used by:

This guidance will inevitably develop over time as technology evolves and project teams trial the process and discover its strengths and limitations.

Ongoing engagement with Māori and other communities and groups may also prompt further changes. It is therefore intended that this User Guide and the related AIA materials will be reviewed and updated on a regular basis.

We recommend ensuring you refer to the most recent version of the User Guide whenever you work on an AIA Questionnaire.

AIA Questionnaire - [DOCX 87KB]

Algorithms play an essential role in the work of New Zealand’s public sector by helping to streamline processes, improve efficiency and productivity, enable the faster delivery of more effective services and support innovation. Algorithms can also help to deliver new, innovative, and well-targeted policies to achieve government aims.

However, it’s well established that the opportunities afforded by new and evolving technologies can also introduce potential risk and harm. That includes challenges associated with accuracy, bias and a lack of transparency, explainability, reliability and accountability. At a societal level, poorly governed algorithmic and AI systems can amplify inequality, undermine democracy and threaten both privacy and security.

As AI tools incorporating algorithms become increasingly sophisticated and commonplace – including the explosion of Generative AI models such as ChatGPT – it is more important than ever that government agencies approach these technologies with due care and diligence.

A responsible approach to the development and use of data, algorithms, and AI, with clear accountability and risk management standards across the algorithm lifecycle will not only help produce better quality algorithms, it will also lead to higher levels of trust and help maintain the social licence of the agency using them.

This perspective is inherent in the Algorithm charter, a set of commitments made by government agency signatories to carefully manage how algorithms are used. The Charter was released in July 2020 to increase public confidence and visibility around the use of algorithms within the public sector.

The Charter does not provide a formal definition of ‘algorithm’ because the risks and benefits associated with algorithms tend to be contextual and are largely unrelated to the type of algorithm being used. Very simple algorithms can result in just as much benefit or harm as more complex ones depending on the context, focus and intended recipients of the outputs.

A broad definition of ‘algorithm’ is included in the Glossary as a high-level guide only, along with examples of the definitions adopted by some agencies.

The Algorithm Threshold Assessment will also help you to determine if the Charter applies and a full AIA is required. It contains a short list of questions designed to ‘weed out’ those algorithms that are unlikely to have a material impact on people and a corresponding risk of harm.

Algorithm Threshold Assessment - [DOCX 58KB]

Algorithm Threshold Assessments should be completed at the planning stage of a new or different algorithm. Where required, the AIA Questionnaire should also be initiated at an early stage, noting it may need to be worked on and updated throughout the development process.

The full AIA process – that is, using the AIA Questionnaire to produce an AIA Report - is designed to apply to higher risk algorithms only. It is not intended that every business rule or process used by agencies will be captured by this process.

The Algorithm Threshold Assessment replaces the assessment using the Risk Matrix set out in the Algorithm Charter. You should use the screening questions in the Algorithm Threshold Assessment to identify if the algorithm in question is likely to automate, aid, replace or inform any operational or policy decisions that are likely to have a ‘material impact’ on individuals, communities, or other groups.

The Algorithm Threshold Assessment also asks whether the algorithm uses any sensitive personal information or any form of artificial intelligence, including Generative AI, machine learning, facial recognition or other biometrics likely to have a ‘material impact’ on any individuals, communities or other groups.

A ‘material impact’ means a decision that could reasonably be expected to affect the rights, opportunities or access to critical resources or services of individuals, communities or other groups in a real and potentially negative or harmful way, or that could similarly influence a decision-making process with public effect.

Determining whether a material impact is likely to occur requires consideration of various factors, including:

For example, decisions affecting people’s legal, economic, procedural or substantive rights (including those relating to the administration of justice or democratic processes, human rights, and privacy rights) or those that could impact eligibility for, or access to services (including education, social welfare, health, housing, ACC or immigration services) are likely to have a material impact, while decisions likely to result in only minor or highly unlikely impacts will not be considered material.

Examples of algorithms likely to have a material impact and therefore provoke a “Yes” answer to the screening questions (triggering the need for completion of the AIA Questionnaire) include:

Examples of algorithms that are not likely to have a material impact include:

These examples demonstrate that the purpose for which an algorithm will be used and the wider context are key to determining the likelihood of some form of material impact. For example, the outcome is likely to be different for the image to text algorithm above if it was being used to digitise paper application forms for a government service. In that context, poor performance of the algorithm on some handwriting styles could influence success rates for individual applicants.

Accordingly, when completing the Algorithm Threshold Assessment, you should discuss whether a material impact is likely with a multi-disciplinary group, including those with privacy, legal and risk management expertise.

Algorithm Threshold Assessment - [DOCX 58KB]

If you tick “Yes” or “Unsure” to one or more of the questions in the Algorithm Threshold Assessment, you should complete the AIA Questionnaire and produce an AIA Report. The general rule of thumb is, if in doubt, please complete the full process.

AIA Questionnaire - [DOCX 87KB]

AIA Report Template - [DOCX 381KB]

Please note that the AIA documentation is not a complete list of all requirements for algorithm projects. Project teams should always ensure they comply with their agency-specific legal obligations, processes, risk management frameworks, policy requirements, and governance mechanisms.

Note that simply completing the AIA process does not mean that an algorithm has an acceptable risk profile and is fine to proceed. The final output of this process – the Algorithm Report – should identify and clearly articulate the relevant harms, risks and mitigants so appropriate decision makers in your agency can take accountability for the algorithm and whether it is acceptable or not to use it as intended.

Questions relating to the collection, use and sharing of data are relevant to both privacy law and ethical considerations associated with algorithms.

For example, data provenance and usage, accuracy, transparency, reliability, security and accountability are all key privacy considerations where personal information is involved – and you will find those core privacy concepts are embedded across all sections of the AIA Questionnaire and this User Guide.

Failure to appropriately identify and manage privacy risks can result in harm to individuals and creates legal and reputational risk for your agency. Accordingly, where the Project or the algorithm involves personal information, you must engage with your Privacy or Legal team to understand whether a Privacy Impact Assessment (PIA) is required either before or in parallel with the AIA process.

While the AIA addresses some algorithm-specific privacy considerations in the “Privacy” section towards the end of the document, it does not replace the need for a PIA.

Generative AI uses prompts or questions to generate text or images that closely resemble human-created content. Generative AI works by matching user prompts to patterns in training data and probabilistically “filling in the blank”. ChatGPT is the most well-known, free, example of Generative AI.

By enabling people to quickly and easily create new content, Generative AI offers many public service benefits, including greater productivity and faster, more efficient innovation.

However, it may also present a range of risks, including in relation to privacy, accuracy, security, Māori Data Governance, procurement and intellectual property rights. Generative AI algorithms also enable and scale the rapid creation of harmful content such as misinformation, revenge porn and media content intended to sow social discord. Moreover, because AI algorithms reflect the values and assumptions of their creators, they can perpetuate conscious and unconscious biases that lead to exclusion or discrimination.

As Generative AI is being integrated into many commonly used public sector tools, it is something that public servants will need to take pro-active steps to manage. Any public sector use should align with the ‘Initial advice on Generative Artificial Intelligence in the public service’ produced by data, digital, procurement, privacy and cyber security system leaders across the New Zealand public service (Joint System Leads tactical guidance on Generative AI). That includes developing an appropriate Generative AI policy for your agency and fully assessing and actively managing risks, including through the use of privacy impact and security risk assessments.

Initial advice on Generative Artificial Intelligence in the public sector

An AIA will be appropriate where the Generative AI is likely to have a material impact on any individuals, communities or other groups. This means that, for example, using a Generative AI tool like ChatGPT - or versions embedded in office software products - for relatively low-risk tasks such as helping to write an email will not require completion of the full AIA process.

However, where the use of Generative AI tools could reasonably be expected to significantly affect individuals, communities or other groups, particularly, where they are being used in circumstances where inaccuracy, bias, mis/disinformation present real risks, an AIA Questionnaire and AIA Report should be completed.

AIA Questionnaire - [DOCX 87KB]

AIA Report Template - [DOCX 381KB]

Please also see the Privacy Commissioner’s expectations around Generative AI and how to manage the potential privacy risks associated with using such tools.

Privacy Commissioner’s expectations on use of Generative AI

Artificial intelligence and the information privacy principles

The AIA process is best completed by involving a diverse and multi-disciplinary range of inputs from people with a wide range of knowledge, skills and experience. While the precise combination will depend on the nature of the algorithm, the agency and the Purpose, that could include the following roles:

Where a co-design approach is being used, you should also include community advocates.

Support from external advisers may also be appropriate, particularly for higher risk algorithms or where internal capability is not available.

Subject to the nature of the data and algorithm to be used, it’s likely you will need to engage with your agency’s internal privacy, security, and legal advisers. External advice may be needed for more high-risk projects or where internal capability is unavailable.

Please ensure you engage with these teams as early as possible to establish when and how they will need to be involved.

Your privacy team should be consulted to ensure the privacy impacts of algorithms using or processing personal information - or that otherwise impact individuals’ privacy rights - are identified, assessed, and mitigated.

A Privacy Impact Assessment (PIA) may be required, which will help identify actions and approvals required under privacy legislation and policy, including the Privacy Act, the Data Protection and Use Policy and other internal data-related policies applicable within your agency.

Data Protection and Use Policy

When completing an AIA, you should consult your legal team to identify and address any legal risks arising from the development, procurement or use of the proposed algorithm and wider system(s). Consultations should begin at the concept stage of a Project, prior to development or procurement.

The nature of the legal risks will depend on the design of the system (for example, the training data or model used), the context of the proposed usage and the nature of the outputs.

Your security team can advise on how best to ensure the algorithm and related data are kept secure and free from external or internal misuse or attack. They can perform a Security Impact Assessment, which is assumed to be completed in association with the questions in section 10: Safety, security and reliability of the AIA questionnaire.

Section 10: Safety, security and reliability

Engagement and collaboration with Māori and other communities impacted by the algorithm is key. See the further discussion in the sections Partnership with Māori and Community engagement relating to the Charter’s ‘Partnership’ and ‘People’ commitments.

Section 5: Partnership with Māori

Section 11: Community engagement

Algorithm Charter for Aotearoa New Zealand

The ‘People’ Charter commitment requires active engagement with people, communities and groups with an interest in algorithms and consultation with those likely to be impacted by their use.

The principle of Mahitahitanga in the Data Protection and Use Policy (DPUP) is also relevant here. This means working together to create and share valuable knowledge and involves including a wide range of people in projects or activities that collect or use people’s information. The principle also advocates for working with iwi and other Māori groups as Te Tiriti o Waitangi partners, ensuring they are involved in decisions about data and information issues that affect them.

Data Protection and Use Policy

Every agency and every AIA is different so there are no hard and fast rules as to who is best placed to be the author of the AIA documentation. However, the following roles would usually be involved in completing the documentation.

If there are any questions in the AIA Questionnaire that you’re unable to answer, please note this down in your answers, including why (for example, because relevant information is unavailable or the question can only be answered after certain things have occurred). Unanswered questions provide an answer in themselves and play a role in the risk profile of the algorithm in question. This should be reflected in the AIA Report.

Please ensure you use plain, clear and simple language when you populate the AIA documentation, avoiding jargon and technical terms where possible.

If the jargon or technical terms must be used, please clearly explain their meaning so a non-technical audience are able to understand the concepts.

Each agency will have its own way of doing things and its own governance and risk frameworks that will continue to apply. This User Guide does not aim to provide a prescriptive process, but rather to include some ideas on how you might want to approach the AIA process to get the best results.

Workshops can be an effective and efficient way for multi-disciplinary groups to brainstorm issues and surface a range of different perspectives, particularly where you need to:

There are various techniques that can be effective for brainstorming and information gathering in workshops. You many find a Lean Canvas approach helpful to start your design journey. This is a one-page model to guide teams through a series of defined steps, helping you to focus on the key aspects of your problem and how you might solve it in a responsible way.

While a Lean Canvas is typically used by entrepreneurs to brainstorm business models, the overall concept can be useful in the AIA context as well.

From Data to AI with the Machine Learning Canvas (Part 1)

Open Ethics Canvas

Ethics Canvas

We recommend holding at least one initial workshop as early as practical to gather and discuss the following information.

Where can we find support?

Definition of Impacted People

Section 12: Transparency and explainability

AIA Questionnaire - [DOCX 87KB]

Consider holding subsequent workshops to check on progress and, as a group, identify and brainstorm emerging risks and mitigants over the course of the Project. A workshop mid-way through the Project provides an opportunity for re-assessment and re-direction if needed.

A final workshop towards the end of a Project can help to confirm the algorithm appropriately satisfies the Purpose, is adequately resourced, delivers the anticipated benefits and does not cause material harm to individuals, communities or other groups.

You may find it helpful to include a good facilitator who understands the issues can keep participants focused and ensure the necessary discussion points are covered and key information is surfaced.

Trials, pilots and proofs of concept (POCs) can play an important role in developing and successfully deploying and using an algorithm. By adopting a smaller and narrower focus in the first instance, you can test and learn before moving to full-scale deployment.

This can help build confidence in the data and the algorithm before they “go live” and are used in ways that impact people. You might like to conduct research into the use of similar technology overseas or in other contexts, as well as running research on how the algorithm performs on a sample data set. This will also provide behavioural insights into how the algorithm may be used, which can enable trouble shooting and help you anticipate unintended consequences.

Note however that pilot projects are likely to be quite different from the development of algorithms at scale, since larger-scale projects may require time to make sure the right data is gathered, the appropriate use case is chosen and costly mistakes are not made while developing technological architecture.

It’s unlikely you will need to complete the AIA process for most POCs, though that will be context dependent (it may be prudent to complete the process for particularly high profile/high risk POCs). However, you may still find the questions in the AIA materials helpful when designing, conducting and reviewing your POC. Consideration of the relevant issues is also likely to assist in getting approval from decision makers to proceed with a more formal and wide-ranging project/initiative, as well as ensuring you will already have a solid base for completing the AIA process where required.

The Interim Centre for Data Ethics and Innovation (ICDEI) supports government agencies to maximise the opportunities and benefits from new and emerging uses of data, while responsibly managing potential risk and harms.

The ICDEI’s role is to raise awareness and help shape a common understanding of data ethics in Aotearoa New Zealand, while building a case for a wider mandate and a scaled-up work programme over time. It will work across a wide network of people and ideas, drawing on the knowledge and expertise within that network, including the Data Ethics Advisory Group.

Where other parts of the network are already undertaking work (like this Algorithm Impact Assessment), ICDEI’s role is to support, accelerate, and use the network to promote and disseminate the work.

The Government Chief Data Steward (GCDS) has convened a Data Ethics Advisory Group (DEAG) to help maximise the opportunities and benefits from new and emerging uses of data, while responsibly managing potential risk and harms. This group will enable government agencies to test ideas, policy and proposals related to new and emerging uses of data. It will also provide advice on trends, issues, areas of concern, and areas for innovation.

The DEAG consists of members with expertise across privacy and human rights law, ethics, innovative data use and data analytics, Te Ao Māori, technology, public policy, government interests in the use of data (social, economic, and environmental), Pasifika and community representation.

The Government Chief Privacy Officer (GCPO) leads an all-of-government approach to privacy to raise public sector privacy maturity and capability. The GCPO supports government agencies to meet their privacy responsibilities and improve their privacy practices.

The GCPO is responsible for providing leadership by setting the vision for privacy across government, building capability by supporting agencies to lift their capability to meet their privacy responsibilities, providing assurance on public sector privacy performance and engaging with the Office of the Privacy Commissioner and New Zealanders about privacy.

Government Chief Privacy Office

The Government Chief Information Security Officer (GCISO) is the government system lead for information security.

The role strengthens government decision-making around information security and supports a system-wide uplift in security practice.

The GCISO’s work includes coordinating the Government's approach to information security, identifying systemic risks and vulnerabilities, improving coordination between ICT operations and security roles, particularly around the digital government agenda, establishing minimum information security standards and expectations and improving support to agencies managing complex information security challenges.

Government Chief Information Security Officer

The Office of the Privacy Commissioner (OPC) works to develop and promote a culture in which personal information is protected and respected in New Zealand. It is an Independent Crown Entity that is funded by the state but which is independent of Government or Ministerial control.

OPC has a wide range of functions, including investigating complaints about breaches of privacy, building and promoting an understanding of the privacy principles, monitoring and examining the impact that technology has on privacy, developing codes of practice for specific industries or sectors, monitoring data matching programmes between government departments, inquiring into any matter where it appears that individual privacy may be affected, monitoring and enforcing compliance with the Privacy Act and reporting to government on matters affecting privacy, both domestic and international.

Office of the Privacy Commissioner

The Artificial Intelligence Forum of New Zealand (AI Forum) is a purpose-driven, not-for-profit, non-governmental organisation that is funded by members. It was founded in 2017 to bring together New Zealand’s community of AI technology innovators, end users, investors, regulators, researchers, educators, entrepreneurs and the interested public to work together to find ways to use AI to help enable a prosperous, inclusive and thriving future for our nation.

The AI Governance Working Group was established to provide thought leadership on the responsible governance of AI in Aotearoa and develop a curated set of frameworks, tools and approaches that meet the needs of New Zealand organisations. It is producing a toolkit on AI governance that includes tools, approaches and principles to help organisations operationalise responsible AI governance. It is also developing a list of AI Governance groups and experts in New Zealand, with details coming soon.

Artificial Intelligence Forum of New Zealand

The algorithm is likely to be part of a wider piece of work, so this section captures the overall name of that wider Project, which is then used throughout the rest of the AIA documentation, where relevant.

Enter the name, role and contact details for the key personnel involved in the Project, including the accountable Executive Sponsor (such as a Deputy Chief Executive or similar).

This section of the AIA Questionnaire captures key details about the Project to help contributors, reviewers and decision makers understand why the Project is being undertaken, what it involves and the expected impacts. This information is critical to informing subsequent questions.

Be sure to attach or link to any relevant documents or supplementary information about the algorithm and the wider Project, such as the business case, specifications, general project documentation, system architecture diagrams, data flow maps, user interface designs, user instructions and manuals, legal advice, privacy advice, Privacy Impact Assessments, security advice.

Clear identification and articulation of the issue or problem you are trying to solve is critical to ensuring you select the right algorithm or other approach to solving that issue.

When describing the problem or issue you are trying to solve, consider the following alongside the ‘status quo comparison’ relating to Question 2.3 discussed below under the heading Identifying other Project information.

‘Purpose’ is a key definition used throughout the AIA process.

It refers to how and why the algorithm helps achieve the objectives of the Project in the relevant business context. This is particularly important when considering accuracy, potential biases and other unfair outcomes, which tend to be highly contextual. Throughout the AIA process, you and your Project contributors should remain focused on why the algorithm is being used, what you are trying to achieve and how it will help you address the issue or problem articulated above.

A workshop with multi-disciplinary participants can be a good way to brainstorm and identify the full range of individuals, groups and communities who are likely to be impacted by the algorithm’s use (the impacted people). The following discussion prompts can be used to guide your discussion.

Who are the impacted people?

People who will be directly impacted by the algorithm. For example,

For these people, discussion prompts include:

People who will be indirectly impacted by the algorithm (those who are affected by the algorithm in a less obvious or immediate way). For example,

For these people, discussion prompts include:

People who will use or access the algorithm. For example,

For these people, discussion prompts include:

In particular, consider the potential impacts on the following groups.

Once you have identified the impacted people, you can then identify the potential benefits and impacts of using the algorithm for each type. This information can initially be recorded in a very simple table (like the one below) before being refined for inclusion in response to questions 2.5 and 2.6 in the AIA Questionnaire.

| WORKSHOP: Identifying Impacted People and potential impacts | ||

| Impacted People | Potential benefits | Potential harms |

| 1. | ||

| 2. [etc…] |

(Together, the |

|

Please note: clearly identifying the Impacted People and associated benefits and harms is an essential element of the AIA process - it is critical to understanding the potential impact of the algorithm on people and not just your agency.

Please succinctly provide sufficient detail to enable someone with no familiarity with the algorithm to understand what the algorithm does, where it comes from, and its role in the wider Project.

The following prompts are designed to help you provide an appropriate level of clarity. Question numbers from the AIA Questionnaire are also listed.

Diverse teams are better equipped to bring a range of perspectives that can help minimise potential bias and other unfair outcomes. Ideally, project teams will consist of people with a diverse range of skills, experiences, genders, ethnicities, ages, abilities and backgrounds, particularly for the team(s) preparing the relevant data and developing the algorithm.

You should ensure there are Māori involved in the Project with a view to ensuring Māori perspectives can be embedded in the design, development, testing and implementation of the algorithm (noting Māori also have a diversity of views and no one person, whānau, hapū, iwi or other Māori organisation or community can speak for all Māori).

If there are gaps in your diversity, it’s important to think about whether any particular perspectives are missing. If so, you may need to take additional steps at the community engagement stage to ensure those perspectives are incorporated into the Project.

To address the time-intensive process of finalising a customer’s tax each year, Inland Revenue implemented a new system to calculate an individual’s tax position where they are reasonably confident of that person’s income.

It uses an algorithm to complete a calculation on the customer’s behalf and issue an immediate refund or notice of outstanding tax.

This has made the tax return process much less onerous for many people, with a high proportion of taxpayers now having to do little or nothing when their tax return is due.

Source: Algorithm-Assessment-Report-Oct-2018.pdf

This section asks you to describe the best and worst-case scenarios that could arise from using the algorithm. These questions aim to start laying the groundwork for articulation of the key risks in the Algorithm Report.

AIA Report Template - [DOCX 381KB]

Describe the best-case scenario that could arise from use of the algorithm, including a description of:

Please describe the worst-case scenario(s) that could arise from use of the algorithm, including a description of what this might look like both when the system works as designed or intended and when the system fails or doesn’t work as designed or intended in some way.

To help you think about how these scenarios might play out in real life, for each scenario please describe the following:

Work and Income’s Youth Service (NEET), uses an algorithm to help identify school leavers at greater risk of long-term unemployment to proactively offer them qualification and training support. The algorithm considers factors shown to affect whether a young person may need support and produces risk indicator ratings to indicate the level of support that might be required. It also refers school leavers to NEET providers for assistance and determines funding.

Since 2012, a third of the more than 60,000 young people that have accepted assistance were offered the service through the algorithm. NEET has proved to be most effective for those with a high-risk rating, resulting in improved education achievements and less time on a benefit, compared with those who did not use the service.

Source: Algorithm-Assessment-Report-Oct-2018.pdf, page 14

Governance refers to the relationships, systems and processes within and by which authority is exercised and controlled.

It is ultimately about accountability and, in the context of algorithms and particularly AI systems, involves ensuring an appropriate framework of policies, practices and processes is in place to manage and oversee the use of algorithms and associated risks to ensure the use of these tools aligns with the organisation’s objectives, is developed and used responsibly and complies with applicable legal requirements. Governance of algorithms should be part of an agency’s overall governance framework.

Joint systems Leads tactical guidance on Generative AI

Record keeping facilitates reviews and the identification and rectification of issues. Higher-risk algorithms with the potential to have a significant adverse impact on people may need to be independently audited.

Forms of Generative AI such as OpenAI’s ChatGPT or Google’s Bard are known to confidently create and present inaccurate content, often referred to as their tendency to ‘hallucinate’ or make prediction errors. You will need to implement robust review and fact checking processes to ensure any inaccurate content is detected where such tools are used.

To facilitate auditing, ensure the development process and training data sources are well documented, log the algorithm’s processes and maintain an appropriate audit trail for any predictions or decisions made by the algorithm.

Human review and oversight can help ensure an algorithm is performing as expected and mitigate the risks of unfair outcomes.

Your answers to Question 4.5 of the AIA questionnaire should detail the nature of the relevant human review and oversight, including whether the algorithm will:

Generally speaking, the less oversight a human can exercise over an algorithm or AI system, the greater the need for more extensive testing and stricter governance.

Immigration New Zealand developed a triage system that includes software to assign risk ratings to visa applications to guide the level of verification required on each application.

The algorithm does not determine whether an application is approved or declined and an Immigration Officer still assesses and determines each application.

Use of the algorithm has increased consistency across visa processing offices, improved processing times, and allowed attention to be focused on higher-risk applications. This allows staff to identify new and emerging risks and see where risks are no longer present.

Source: Algorithm-Assessment-Report-Oct-2018.pdf (Internal Affairs, Stats NZ), page 17

While human review can help ensure an algorithm is performing as expected, this can be undermined where ‘automation bias’ occurs. ‘Automation bias’ refers to “the tendency to over-rely on automated outputs and discount other correct and relevant information”.

(Source: https://assets.publishing.service.gov.uk/media/5d7f6b2540f0b61ccdfa4b80/RUSI_Report_-_Algorithms_and_Bias_in_Policing.pdf, at p. 15).

The unquestioning acceptance of automated decisions and recommendations can lead to system errors being overlooked, potentially leading to harm.

You should aim to ensure an appropriate balance of machine and human decision-making and implement suitable safeguards to minimise the risks of automation bias, including:

A range of legal obligations may apply to use of the algorithm, including under the Privacy Act 2020, the Human Rights Act 1993, the New Zealand Bill of Rights Act 1990, the Copyright Act 1994, and agency and sector specific legislation, as well as administrative and public law principles and legislation.

Please talk to your legal team to identify and address any specific legal or regulatory concerns. That is particularly important for automated decision-making algorithms, where there may be statutory powers that cannot be delegated or fettered without parliamentary approval.

Please make sure any legal advice is attached to the completed AIA questionnaire.

Citizens are entitled to challenge decisions made about them by government agencies. This can also help to surface inaccuracies and unfair outcomes.

The ability to challenge an algorithm-based decision is inherently connected with transparency and explainability – people need meaningful explanations about how decisions affecting them have been made.

It may help to establish a process or ‘feedback loop’ for Impacted People to report potential vulnerabilities, risks or unfair outcomes. You should also have a manual or similar alternative process available in case the algorithm is not performing adequately.

Appropriate guidance and training should be provided to all staff who interact in a material way with the algorithm. That may include training on:

The US National Institute of Standards and Technology (NIST) provides guidance on AI governance in its AI Risk Management Framework. The ‘Govern’ function sets out the requirements for governance of an AI programme, including policies and procedures, accountability structures, diversity, culture and risk management.

NIST AI risk management framework

Govern function in AI risk management framework

The Ministry of Social Development’s Model Development Lifecycle provides a governance framework, including the need for clearly defined roles and responsibilities, approval gates, the recording of risks and the ownership of both controls and risks.

Ministry of Social Development’s Model Development Lifecycle

The Policy Risk Assessment questionnaire developed by the New Zealand Police includes a section on ‘Oversight and accountability’ that sets out requirements for individuals to certify that the technology can do what is claimed, the proposal has been assessed from privacy, security, te ao Māori, and ethical perspectives, and that the technology is explainable.

In Aotearoa New Zealand, the governance relationship between the Crown (government) and Māori is shaped by Te Tiriti o Waitangi. The Charter reflects the Crown’s commitment to honour Te Tiriti o Waitangi and ensure the use of algorithms is consistent with the articles and provisions in Te Tiriti.

The articles and provisions of Te Tiriti can be summarised as (but are not limited to):

The courts and Waitangi Tribunal have described Te Tiriti generally as an exchange of solemn promises about the ongoing relationships between the Crown and Māori, including the promise to protect Māori interests and allow for Māori retention of decision-making in relation to them.

To ensure algorithm use delivers clear benefits to iwi and Māori, government agencies need to build trust and form enduring relationships with iwi and Māori to:

New Zealand is also a signatory to the United Nations Declaration on the Rights of Indigenous Peoples (UNDRIP). UNDRIP is a comprehensive international human rights document on the rights of indigenous peoples. It covers a broad range of rights and freedoms, including the right to self-determination, culture and identity, and rights to education, economic development, religious customs, health and language.

United Nations Declaration on the Rights of Indigenous Peoples (UNDRIP)

To meet the Partnership commitment in the Charter you should:

Te ao Māori acknowledges the interconnectedness and interrelationship of all living and non-living things via spiritual, cognitive, and physical lenses. This holistic approach seeks to understand the whole environment, not just parts of it. (This definition comes from the Treaty of Waitangi/Te Tiriti and Māori Ethics Guidelines for: AI, Algorithms, Data and IOT.)

Treaty of Waitangi/Te Tiriti and Māori Ethics Guidelines for: AI, Algorithms, Data and IOT.

Māori are diverse in terms of interests, aspirations and needs. There is no one Māori world view but multiple te ao Māori perspectives.

Use what your organisation already has and knows. It’s important to coordinate engagement activities and not duplicate what others have already done to minimise the burden on iwi and Māori.

A Te Tiriti analysis will help you identify how the articles and principles apply to the development and use of the algorithm in your Project. The Policy Project Toolbox collates guidance on conducting this analysis – a key resource is the Cabinet Circular on Te Tiriti o Waitangi guidance.

The Policy Project Toolbox

Cabinet Office Circular CO(19)5: Te Tiriti o Waitangi/Treaty of Waitangi Guidance

When engagement with iwi and Māori is needed, ensure you engage early in your project – building trust and relationships needs time and space. Be conscious of timeframes – relationships should not exist solely for the duration of a project. Decide how you will sustain these relationships to move them from extractive and transactional to enduring and reciprocal. Consider whether decision-making will be shared and, if so, how. Share what actions have resulted from the input and contribution of Te Tiriti partners.

Follow Te Arawhiti’s engagement framework and guidelines to ensure you have appropriately identified Māori interests. Te Arawhiti’s resources include:

Crown engagement with Māori framework

Guidelines for engagement with Māori

Engagement strategy template

Principles for building closer partnerships with Māori

The Ngā Tikanga Paihere framework may also be helpful in encouraging you to be mindful of those potentially impacted by your algorithm and aid in developing a more holistic view that includes te ao Māori perspectives.

You may also want to check the Settlement Portal – Te Haeata, an online record of Treaty settlement commitments. This portal helps agencies and settled groups search for and manage settlement commitments.

Be aware of the overwhelming demand from government on iwi and Māori to engage and consult on issues of concern. This often happens without creating the conditions for engagement and consultation to take place in a way that works for these communities. Do not contribute to the overwhelming of iwi and hapū leaders and Māori experts.

If iwi and Māori say no, it doesn’t necessarily mean they’re not interested. It’s likely that they’re already participating in many other government kaupapa, or this isn’t a priority for them right now.

Te Kāhui Raraunga has published a Māori-Crown Co-design Continuum which identifies three main types of Māori-Crown co-design and two other design approaches where Māori and Crown design independently:

The Continuum can be used as a planning tool for Māori co-design initiatives, whether they are initiated by Māori, or Māori are invited by Crown agencies to co-design.

Māori Crown Co-design Continuum

Māori data is defined as data that is about, from or by Māori, and any data that is connected to Māori. This includes data about population, place, culture, environment and their respective knowledge systems. (This definition comes from Te Kahui Raraunga’s Iwi data needs paper.)

Māori data is not owned by any one individual, but is owned collectively by one or more whānau, hapū or iwi. Individuals’ rights (including privacy rights), risks and benefits in relation to data need to be balanced with those of the groups of which they are a part. (This definition comes from Te Mana Raraunga.)

Māori data sovereignty recognises that Māori data should be subject to Māori governance – the right of Māori to own, control, access and possess Māori data. Māori data sovereignty supports tribal sovereignty and the realisation of Māori and iwi aspirations. This definition comes from Te Mana Raraunga.)

For Māori, data is a taonga. Māori are and have been data designers, collectors and disseminators for generations. The form Māori have collected it in differs from the modern understanding of data, and the forms that data is collected and transmitted are closely interconnected with Māori mātauranga and ways of being. Data was and continues to be how Māori have continued their consciousness as Māori across time and distance. (This definition comes from Te Kahui Raraunga’s Iwi data needs paper.)

Māori recognise that Māori data contains a part of the people or natural environment that the data is about, no matter how anonymised the data is.

Algorithm use should contribute to improved outcomes for Māori.

Impacts on Māori cannot be fully identified from a non-Māori perspective, so consultation with Māori is essential.

(Source: Cabinet Office Circular CO(19)5)

Links to other helpful guidance:

The Charter’s Data commitment requires signatories to make sure data is fit for purpose by understanding its limitations and identifying and managing bias.

Data is the life blood of algorithms, so it’s critical to understand what training and production data will be used, how accurate and reliable it is and whether it is suitable to use in the Project.

Remember that data is a taonga (treasure) and of high value so it must be treated with respect, particularly where it relates to people and Māori in particular.

Using the right data in the right context can substantially improve decision making but the opposite is also true. A long-standing rule of data science is GIGO – Garbage in, Garbage out. Poor quality data can lead to inaccurate, unreliable and even harmful results, particularly where historical biases in data are not considered and addressed.

To help decision makers identify and understand any potential adverse impacts before relying on algorithmic insights or decisions, please clearly describe in your AIA questionnaire answers both the data that will be used to train the algorithm (training data) as well as the data that will be fed into the algorithm once it has been deployed (production data). Please make sure you provide answers in relation to both types of data.

Please explain the sources of your data, including who collected the information, from whom, when, where, how and for what purposes. If this information is unknown or unavailable, please be sure to state this and the reasons why it is unclear.

For personal information, consider whether the information was collected from individuals with their knowledge and consent. Even where consent is not be a legal requirement, you are more likely to have social licence to use that information if the relevant people have agreed to it being collected and used in the first place.

See the discussion on consent in A Path to Social Licence – Guidelines for Trusted Data Use published by the Data Futures Partnership.

Definition of social licence (Glossary)

A Path to Social Licence – Guidelines for Trusted Data Use

For example, personal information collected many years ago for a different purpose may not be legally available for you to use depending on your Project’s context and proposed use case. Please consult with your Privacy team in such situations.

When it comes to using data obtained from external parties, you should ensure you understand the source and method of data collection, particularly for training data. Sensitive personal information sourced from overseas may have consent requirements associated with collection (for example, information sourced from Australia, the UK and Europe) and you will need to have visibility of whether it was collected in accordance with the law. Again, please consult with your Privacy and Legal teams.

Algorithms used to detect skin cancer can operate more accurately than dermatologists.

However, research has shown that algorithms trained on images taken from people with light skin tones only might not be as accurate for people with darker skins and vice versa. This can arise due to underrepresentation in training datasets as well as inconsistencies in the devices used for image acquisition and selection.

Consider who owns your training and your production data and whether you have the appropriate rights to use that data in the Project. Note that, strictly speaking, no one “owns” personal information; rather you are a steward or custodian of that data and the Privacy Act 2020 governs how it is to be handled and protected.

You should pay particular attention to the ownership of data generated using Generative AI tools, which may not be clear cut. Please consult with your Legal team around any copyright or other intellectual property questions.

Will the data be stored in New Zealand or offshore (including in overseas data centres)? If the data includes personal information, offshore storage may require compliance with IPP 12 of the Privacy Act 2020 – discuss with your Privacy team.

For an algorithm to be effective, its training data must be representative of the communities to which it will be applied. This is particularly important for algorithms developed externally (for example, by a supplier) or trained on overseas data.

Make sure you have sufficient data to achieve the Purpose. Is any additional data required to improve accuracy and reduce the risk of unfair results?

If so, where will you get it from and how will it be obtained?

Statistical accuracy refers to the ability to produce a correct or true value relative to a defined parameter. In the context of an algorithm, that’s likely to reflect how closely an algorithm’s outputs match the correct labels in test data.

As discussed in the Ministry of Social Development’s (MSD) Data Science Guide for Operations (Data Science Guide) that forms part of its Model Development Lifecycle (MDL), the accuracy of an analytical model usually depends more on data preparation methods than on the model type or the model tuning procedures.

The Data Science Guide notes “it is inevitable that data will contain some errors”, including historical, systematic and random errors. It suggests that for each data variable used, the different sources of error should be considered, mitigated and documented.

It goes on to set out some of the key decisions that must be made in preparing the data are as follows (noting MSD’s comments that this isn’t an exhaustive prescription of how to prepare data).

The Data Science Guide for Operations notes that, as with most of the decisions made in building an algorithm, the answers to these questions rely on understanding the business objective. Accuracy needs to be considered alongside other criteria, including transparency, ease of implementation and the potential for bias – and trade-offs between the relevant criteria may be necessary.

Where this is the case, you should ensure potential trade-offs are considered by a multi-disciplinary team (including business owners and privacy and legal representatives) in the context of the use case, the algorithm’s contribution to the Purpose and the business context and any potential risks that may result from any such trade-offs.

Where you are proposing to use Generative AI, you should also consider and document how you will address the specific risks of so-called ‘hallucinations’. That is, the tendency of Generative AI to ‘make up’ information or return out-of-date, biased or misleading results.

As noted in the Joint System Leads tactical guidance on Generative AI, ensuring the accuracy of AI outputs is critical. It is essential that data used to train AI tools is of high-quality, for quality outputs. Cleansing, validating and quality-assuring data can help to ensure accuracy and reliability of outputs.

Joint System Leads tactical guidance on Generative AI

See also the discussion on Performance and testing in section 9 of this User Guide, which includes examples of some appropriate metrics for measuring accuracy and other performance issues.

Various techniques are available for documenting data used in algorithms and AI tools, such as:

FactSheets

Datasheets for Datasets

Model Cards for Model Reporting

5 things to know about AI model cards

Dataset Nutrition Labels

Reward Reports

For more detail on data preparation for use in algorithms and some of the key decisions to make where preparing data, see the Ministry of Social Development’s Data Science Guide for Operations that forms part of its Model Development Lifecycle (MDL).

The MDL is an open source set of documents intended to be used by other agencies. It consists of a User Guide, a Governance Guide and a Data Science Guide for Operations, all of which aim to provide decision makers with assurance that technical, legal, ethical and Te Ao Māori opportunities and risks are managed throughout an algorithm’s lifecycle.

Remember to attach or link to a copy of any Privacy Impact Assessment already conducted, as well as answering the questions in the AIA.

As noted in the introductory section About the Algorithm Impact Assessment process, privacy considerations are embedded throughout the AIA process, including in relation to questions of data collection, quality, security, accuracy, transparency and access.

Algorithm-specific privacy issues are raised in the Privacy section of the AIA Questionnaire, including some that might not automatically be considered in a standard Privacy Impact Assessment (PIA).

Algorithms that use large volumes of personal information – or particularly sensitive personal information – may require a separate PIA.

As there may be some cross-over between the work done in each of the AIA and PIA processes, you should speak to your Privacy team at the earliest opportunity. They can help you to determine the best risk assessment processes for the Project and which information is relevant in what context to avoid duplication and ensure consistency. This is particularly important in relation to risk descriptions and controls so as to avoid confusion.

Your Privacy team can also help you to understand the key privacy risks and mitigants, ensure a Privacy by Design approach is embedded across the Project and articulate privacy considerations in workshops within the AIA process. Early-stage workshops as discussed in the Project information section can also be a helpful starting point for privacy teams to gather project information to inform a PIA.

Where Generative AI tools are proposed, please refer to the following New Zealand public sector guidance, which will be updated as the technology evolves.

Guidance from the Office of the Privacy Commissioner on Generative Artificial Intelligence

System Leads tactical guidance on Generative AI

Biometric technologies enable the automatic recognition of people based on their biological or behavioural features, including their faces, eyes (iris or retina), fingerprints, voices, signatures, keystroke patterns, odours or gait.

The use of biometric technologies can present significant risks, including in relation to surveillance and profiling, bias and discrimination, and a lack of transparency and accuracy.

The Office of the Privacy Commissioner (OPC) has clearly stated that biometric information is personal information that is regulated by the Privacy Act 2020. It is currently exploring whether to establish a new biometrics Code of Practice pursuant to the Privacy Act and consultation is underway.

Biometrics and privacy – Privacy Commissioner

Project teams looking to use biometric technologies should ensure they are compliant if the Code of Practice comes into force. As always, please engage with your Privacy team at the earliest opportunity where biometric technologies are proposed as part of a Project.

The Kaitiakitanga principle in the Data Protection and Use Policy includes keeping data safe and secure and respecting its value, as well as acting quickly and openly if a privacy breach occurs.

Data Protection and Use Policy

The Ministry of Social Development’s Privacy, Human Rights and Ethics Framework (PHRaE) is a helpful tool for identifying and addressing privacy, human rights and ethical risks. It includes guidance documents relating to personal information, data security, transparency, bias, operational analytics, partnership and automated decision-making.

Privacy, Human Rights and Ethics Framework (PHRaE)

‘Unfair outcomes’ is the term used in the AIA questionnaire to refer to bias, discrimination and other unfair, unintended or unexpected outcomes. These unfair outcomes may occur for a variety of reasons, including as a result of historical data that reflects cultural biases or as a consequence of how the algorithm itself is developed or used. They can often have significant impacts as discussed in the article Can algorithms make decisions about our lives in an unbiased fashion?.

Can algorithms make decisions about our lives in an unbiased fashion?

Anyone subject to algorithmic decision-making by government agencies or courts in New Zealand has legal protection from discrimination under the New Zealand Bill of Rights Act 1990 and the Human Rights Act 1993.

Discrimination is unfair treatment based on the protected characteristics in the Human Rights Act 1993, which include sex, race, ethnic or national origin (including nationality or citizenship), disability, age and employment status.

It's important to note that bias leading to unfair outcomes can still occur even it does not meet the requirements for discrimination under the Human Rights Act.

Algorithms used by criminal justice systems across the United States to predict recidivism were found to be biased against Black people. Black defendants were more likely than white ones to be incorrectly judged as having a higher risk of re-offending.

Source: https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing

Bias can be defined as a systematic difference in the treatment of certain objects, people or groups in comparison to others. This can occur due to a range of factors, including:

Fairness: The related but distinct concept of ‘fairness’ deals with more than just the absence of bias. Fairness considerations typically relate to the overall outcomes of an algorithm, including the need for fair decisions to be reasonable, to consider equality implications, to respect personal agency and to not be arbitrary.

There are multiple perceptions of fairness and it is a highly conceptual and often ambiguous concept. This can be particularly challenging for algorithms, as fairness is not a notion with absolute and binary measurement.

It is therefore critical that human developers and users of algorithms decide on an appropriate definition of fairness for each Project and the specific context. The target outcomes and their trade-offs must be specified with respect for the relevant context.

The concept of equity is also important, particularly in a health context.

Amazon dropped an AI-powered recruitment tool to review CVs after it was found to penalise female job candidates.

The algorithm was trained to identify patterns in successful job applications at Amazon over the previous 10 years – the majority of whom were male. So even though the algorithm was not explicitly trained to look at gender, it taught itself to prioritise male candidates because of historical hiring biases.

Common categories of negative or unfair impacts or outcomes include the following types:

These types of harm are not mutually exclusive and an algorithm or wider Project can involve more than one type.

A Dutch court ordered the Dutch tax authority to stop using an algorithm to predict childcare benefit fraud due to breaches of human rights and privacy laws. After more than 20,000 families were wrongly accused of benefit fraud, the tax authority admitted that at least 11,000 people were singled out for special scrutiny because of their ethnic origin or dual nationality, fuelling longstanding allegations of systemic racism in the Netherlands. It was also criticised for a lack of appropriate checks and balances surrounding use of the algorithm.

Dutch scandal serves as a warning for Europe over risks of using algorithms

Source: Blackbox welfare fraud detection system breaches human rights, Dutch court rules

There are many different types of bias and they can be conscious or unconscious (that is, outside a person’s conscious awareness). See the definition of ‘Bias’ in the Glossary for an indication of some of the different types of bias, noting this is not an exhaustive list.

Given the many complex sources of bias and other unfair outcomes, it is not possible to completely remove bias or guarantee fairness. In fact, bias can have positive, neutral and negative effects and even may be desirable in some cases (for example, to correct for historical under-representation – see the surgery waitlist equity adjustor case study below).

What constitutes a negative effect will therefore depend on the context, business goals and the overall Purpose for using the algorithm and will need to be carefully considered by a multi-disciplinary team.

Those collaborating on an algorithmic project should clearly define and document what fairness means in that particular project, ensuring a diverse range of perspectives contribute to that definition.

Ethnicity is one of five criteria used in a Te Whatu Ora algorithm to prioritise waitlisted patients for surgery. The equity adjuster waitlist tool aims to reduce inequity in the health system by considering clinical priority, time spent on the waitlist, geographic location, ethnicity (specifically Māori or Pacific people) and deprivation level. Clinical need takes precedence.

Source: https://www.hinz.org.nz/news/642771/Auckland-algorithm-improves-equity-of-waitlists.htm

Avoiding bias and achieving fairness can involve having to consider trade-offs relating to the defined Purpose and competing priorities.

It is always important to balance performance metrics against the risk of unfair outcomes - you may want to consider monitoring a set of metrics (see further discussion in the Algorithm performance, testing and monitoring section) that balances performance across several dimensions. Transparency and documentation of priorities and underlying assumptions are essential.

Those designing and using algorithms need to be aware of the various ways in which unwanted bias can be introduced and then design, test and validate their systems to correct for potential unfair outcomes.

Even when information that may cause discrimination is not present in a dataset, it is still possible to discriminate by using ‘proxy variables’. These are often used where relevant data is not readily available or is difficult to measure.

Difficulties can arise where the data used could represent or correlate with sensitive attributes or prohibited grounds of discrimination. The exclusion of protected characteristics from training or input data does not guarantee that outcomes will not be unfair, since other variables could serve as close proxies for those characteristics. For example, a postcode could operate as a proxy for race.

A number of otherwise benign features can combine to become a proxy from which sensitive information may be inferred. AI systems are particularly efficient at identifying underlying patterns which may correlate to a protected attribute like ethnicity, and as a result could make predictions or decisions which create the risk of bias or discrimination.

Biometric technologies (biometrics) have the potential to produce biased or discriminatory outputs, particularly where training data is significantly different from production data. This can also be caused by the fact that systems designed to detect physical characteristics (for example, faces, fingerprints) may need to manage a wide range of variables than if detecting a uniform object like a swipe card.

If a biometric system detects the characteristics of a certain group less accurately than others, it’s likely to produce biased outcomes. This could result in discrimination against a particular group.

You may be able to identify unwanted bias at various points during the development and deployment of algorithms, including when:

You may be able to address unwanted bias when:

See also the section on Algorithm performance, testing and monitoring in this User Guide for examples of algorithm performance metrics.

Various tools are available to enable transparent reporting of algorithm provenance, usage and fairness-informed evaluation – see Risk mitigation options in the Data section of this User Guide.

ISO Standard SA TR ISO/IEC 24027:2022 on Bias in AI systems and AI aided decision making

The benefits of agencies developing algorithms in-house include greater visibility and control of training data and the algorithm itself, as well as easier oversight and clear lines of accountability. Agencies are able to develop an algorithm in line with their defined Purpose and are well positioned to clearly define and document the algorithm’s technical features and performance metrics.

Good practice includes recording the use of algorithms and AI systems in an internal inventory with accompanying information relating to its source, usage and basic technical details.

See also the discussion on open-source solutions below, which may also be relevant in projects involving the internal development of algorithms.

For more detail on algorithm selection and optimisation, see the Ministry of Social Development’s Data Science Guide for Operations that forms part of its Model Development Lifecycle.

As algorithms and AI tools become increasingly sophisticated, agencies are likely to seek to procure such tools from specialist external suppliers rather than developing them in-house. Third-party AI tools, including open-source models, supplier platforms and commercial APIs, are now commonplace and AI-as-a-Service is a growth trend involving the use of AI tools built by others in the cloud.

While there may be valuable cost savings and quality considerations that support a procurement approach to algorithms, the specific risk profile of externally sourced algorithms necessitates appropriate due diligence.

Agencies remain responsible for any harms or risks caused by third-party algorithms or AI tools.

The questions in the AIA Questionnaire are designed to support agencies to carefully consider their approach to procurement (including where algorithms may be provided free of charge). Further clarification of those questions is provided below.

AIA Questionnaire - [DOCX 87KB]

When completing the AIA Questionnaire, please attach or link to any relevant evidence provided by the selected supplier(s) to support any claims about their responsible and ethical approach to algorithms and related data. That includes details of whether the supplier’s governance and ethical positions align with New Zealand regulatory requirements (including the Privacy Act 2020), the Algorithm Charter and principles of open government.

As with the rest of the algorithm lifecycle, a multi-disciplinary approach to algorithm procurement is key to ensuring the selected algorithm is the best fit for the Purpose and key risks have been identified and addressed or accepted.

Please provide a clear description of exactly what you are procuring. For example, is the algorithm or application:

Ensure you are using robust criteria to evaluate potential suppliers that address the specific risks associated with algorithms (and AI applications in particular). For example:

To drive the right behaviours by prospective suppliers of algorithms and AI tools, agency procurement teams should consider placing contingencies on supplier access to public sector procurement opportunities. For example, including requirements for supplier to demonstrate compliance with Charter commitments and ensure the explainability and interpretability of algorithms (for example, by sharing test results and explanations). This should be backed up with equivalent contractual obligations.

To improve the optimisation of school bus route design, the Ministry of Education uses an algorithm to develop, standardise, automate, and maintain school bus routes.

Using licensed software, the algorithm calculates the most effective route for pick-up and drop-off of students, drawing on up-to-date information about road changes and speed limits. This has made bus travel more efficient for children and communities, led to significant efficiencies in planning time for bus routes and bus travel times, saved $20 million for taxpayers each year and reduced greenhouse gas outputs.

Source: Algorithm-Assessment-Report-Oct-2018.pdf (Internal Affairs, Stats NZ), page 12

As a general rule, you should aim to ensure your contracts with external suppliers include clear obligations, indemnities and liability positions to ensure appropriate allocation of risk between the parties.

You will need to work closely with your Procurement and Legal teams to achieve this. To the extent possible – and depending on the relative bargaining power of the parties – you should aim to incorporate the evaluation criteria outlined above into the supplier’s obligations and require the following binding obligations from suppliers.

Appropriate indemnities and liability positions aligned with the extent of risk.

If a supplier’s algorithm is trained on data collected overseas that is not representative of the New Zealand populations to which it will be applied, this could lead to biased or discriminatory outputs. For example, a facial recognition model trained on images from North American or Chinese populations is likely to struggle to accurately identify New Zealand populations.

You should therefore aim to get as much visibility as possible of the supplier’s training data sources so you can understand the potential for unfair outcomes and how those can be identified and addressed.

The reality is, however, that many suppliers will refuse to share this information for commercial confidentiality reasons. In addition, the relative bargaining power between the parties may be such that it is just not possible to get this information.

Where that is the case, you will need to consider the context and potential risks and clearly outline the issues in the AIA questionnaire, including why the supplier refuses to be transparent about their training data. In some instances, this may not result in significant risk or there may be scope to focus additional efforts on identifying and mitigating downstream harms to compensate for this lack of visibility. The acceptability of the risk profile will need to be decided on a case by case basis by the AIA decision maker with input from the Project team.

Generative AI tools are able to generate high-quality content extremely quickly and efficiently. However, there are various specific issues and risks to be aware of.

The exploited labor behind artificial intelligence

OpenAI used Kenyan workers on less than $2 per hour to make ChatGPT less toxic

Where Generative AI tools are proposed, please refer to the following New Zealand guidance, which will be updated as the technology evolves.

Guidance from the Office of the Privacy Commissioner on Generative Artificial Intelligence

Joint System Leads tactical guidance on Generative AI

Agencies using third-party generative AI-related tools should consider how best to address these issues, seeking appropriate legal, privacy and other advice as necessary.

Open-source software is designed to be freely available to the public for use, modification and distribution by anyone. Open-access Generative AI systems enable anyone to develop their own apps for free. The benefits of open-source solutions include greater transparency, encouraging innovation through open collaboration and increasing adoption by reducing barriers to entry.

There are also obvious benefits in being able to access these solutions for free, making them particularly attractive in the public sector and to other organisations with fewer resources to develop or procure algorithms and AI systems themselves.

The Joint System Leads tactical guidance on Generative AI sets out various risks associated with using open-source or open access Generative AI solutions. It recommends exercising caution when using open-source AI, including taking steps to assess the testing, maintenance and governance of open-source AI software to ensure it is secure, appropriate, of high quality, and properly supported over time.

Joint System Leads tactical guidance on Generative AI

It also recommends ensuring you are following government procurement rules when sourcing Generative AI tools, conducting market research on suppliers and their offerings and including specific commercial protections in supplier contracts (including for privacy, security and ethical risks, technology obsolescence, vendor lock-in, and reliance on third-party provided services/AI).

Note that many of these issues are also relevant when procuring “closed-source” solutions, particularly in relation to vendor lock-in and reliance on third-party provided services.

Data and privacy breach risks are likely to increase if the supplier has access to your production data, so please detail why any such access may be necessary and what security measures and other relevant controls will be implemented to protect that data from unauthorised access, use or disclosure.

Who will be responsible for those security measures and what contractual obligations have been placed on the supplier in relation to such access?